Introducing ngrok-rs - safe and portable network ingress to your Rust apps 🦀

Today, we're excited to announce ngrok-rs, our native and idiomatic crate for adding secure ingress directly into your Rust apps 🦀. If you’ve used ngrok in the past, you can think of ngrok-rs as the ngrok agent packaged as a Rust crate. ngrok-rs is open source on GitHub, with docs available on docs.rs and a getting started guide in the ngrok docs.

ngrok-rs lets developers serve Rust services on the internet in a single statement without setting up low-level network primitives like IPs, NAT, certificates, load balancers, and even ports! Applications using ngrok-rs listen on ngrok’s global ingress network for HTTP or TCP traffic. ngrok-rs listeners are usable with hyper Servers, and connections implement tokio’s AsyncRead and AsyncWrite traits. This makes it easy to add ngrok-rs into any application that’s built on hyper, such as the popular axum HTTP framework.

Check out how easy it is to run the hello world of axum with ngrok-rs:

use axum::{routing::get, Router};
use ngrok::prelude::*;
use std::error::Error;

#[tokio::main]
async fn main() -> Result<(), Box<dyn Error>> {
    // build our application with a route
    let app = Router::new().route("/", get(|| async { "Hello World!" }));

    // Listen on ngrok's global network (i.e. https://myapp.ngrok.dev)
    let listener = ngrok::Session::builder()
        .authtoken_from_env()
        .connect()
        .await?
        .http_endpoint()
        .listen()
        .await?;
    println!("Ingress URL: {:?}", listener.url());

    // Instead of listening on localhost:8000 like this:
    // axum::Server::bind(&"0.0.0.0:8000".parse().unwrap())
    // Start the server with an ngrok listener!
    axum::Server::builder(listener)
        .serve(app.into_make_service())
        .await?;
    Ok(())
}

To run this app, include the necessary dependencies, add your ngrok authtoken to the environment as NGROK_AUTHTOKEN, and start your app:

cargo add ngrok -F axum
cargo add axum
cargo add tokio -F rt-multi-thread -F macros
export NGROK_AUTHTOKEN=[YOUR_AUTH_TOKEN]
cargo run

Your app is assigned a URL (e.g. https://myapp.ngrok.dev), available via listener.url(), and is ready to receive requests! There is no additional configuration or code required.

How ngrok-rs works

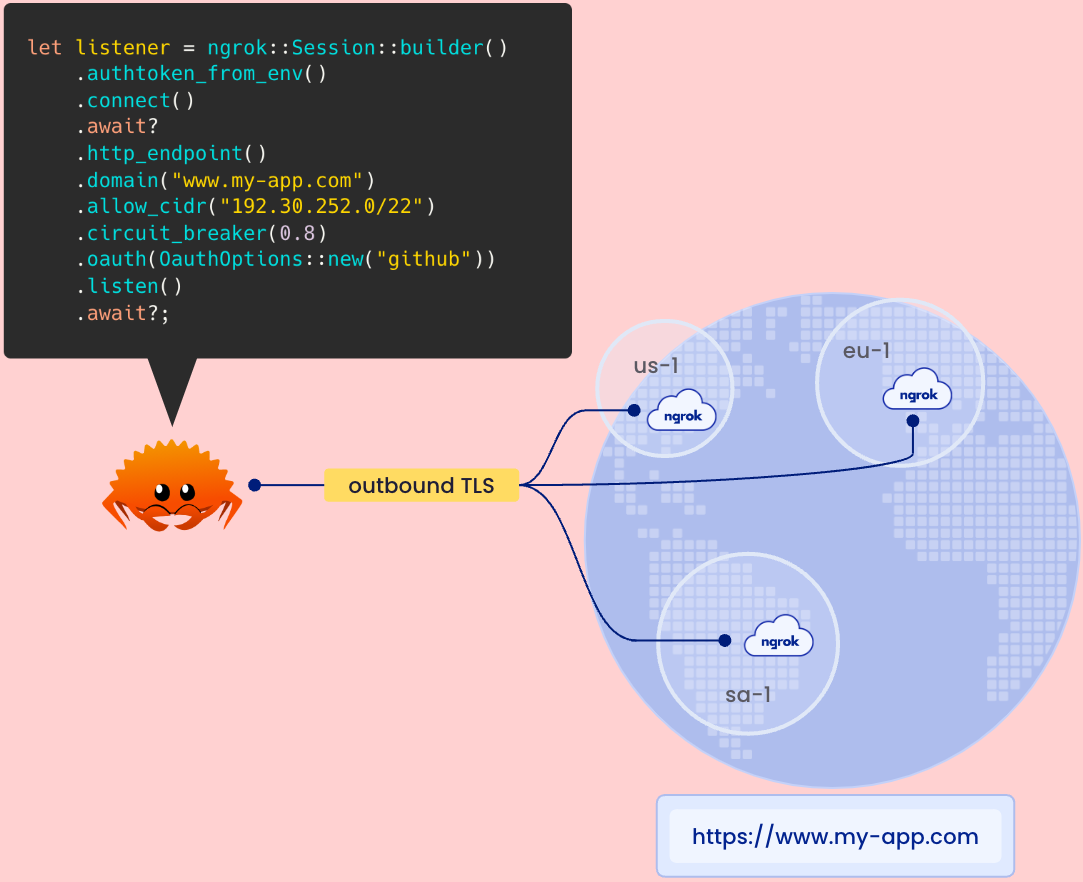

The example app above doesn’t listen on any ports. How is that possible? When you create the listener object, ngrok-rs initiates a secure and persistent outbound TLS connection to ngrok’s ingress-as-a-service platform and transmits your configuration requirements, such as domain, authentication, webhook verification, and IP restrictions. The ngrok service sets up your configuration across all of our global points of presence in seconds and returns a URL for your application.

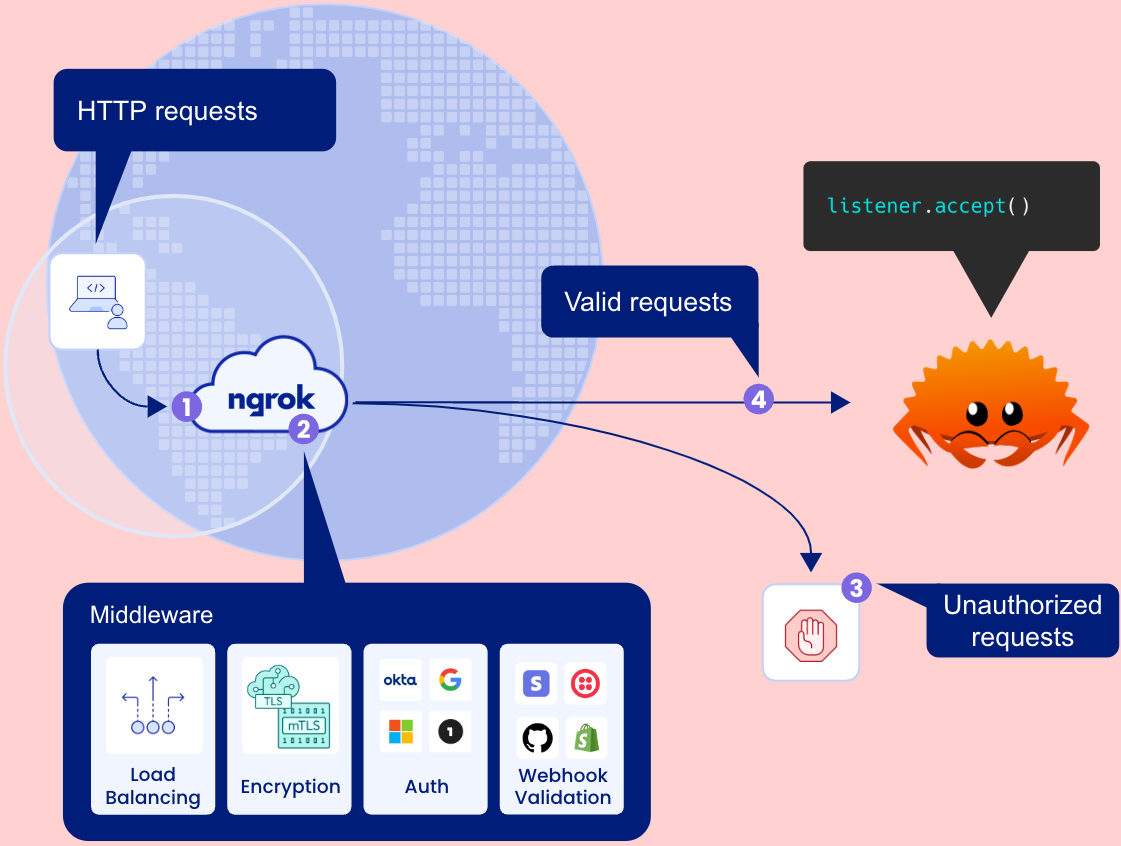

After your ingress is set up, ngrok receives HTTP requests at the closest region to the requester and enforces the middleware policies defined by your application. Unauthorized requests are blocked at the edge, and only valid requests reach your ngrok-rs app via the persistent TLS connection and incoming stream.
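To make the "no listening port" idea concrete, here is a minimal sketch using only the Rust standard library. It is a toy model and not ngrok's actual protocol: there is no TLS, no multiplexing, and no authentication, and the names run_edge, run_app, and demo are hypothetical. It only shows that once an app has dialed out, the other side can push requests back over that same connection.

```rust
use std::io::{BufRead, BufReader, Write};
use std::net::{SocketAddr, TcpListener, TcpStream};
use std::thread;

/// Toy "edge" (hypothetical): accepts the app's outbound connection, pushes
/// one request line down it, and returns the app's one-line reply.
fn run_edge(listener: TcpListener, request: &str) -> std::io::Result<String> {
    let (stream, _peer) = listener.accept()?;
    let mut writer = stream.try_clone()?;
    writer.write_all(request.as_bytes())?;
    writer.write_all(b"\n")?;

    let mut reply = String::new();
    BufReader::new(stream).read_line(&mut reply)?;
    Ok(reply.trim_end().to_string())
}

/// Toy "app" (hypothetical): never binds a port. It dials out to the edge,
/// then answers the request that arrives over the connection it opened.
fn run_app(edge_addr: SocketAddr) -> std::io::Result<()> {
    let stream = TcpStream::connect(edge_addr)?;
    let mut writer = stream.try_clone()?;

    let mut request = String::new();
    BufReader::new(stream).read_line(&mut request)?;
    writeln!(writer, "Hello from the app, you asked for {}", request.trim_end())?;
    Ok(())
}

/// Wires the two halves together on the loopback interface.
fn demo() -> std::io::Result<String> {
    // Only the edge listens; port 0 lets the OS pick a free port.
    let listener = TcpListener::bind("127.0.0.1:0")?;
    let edge_addr = listener.local_addr()?;

    let app = thread::spawn(move || run_app(edge_addr));
    let reply = run_edge(listener, "GET /")?;
    app.join().expect("app thread panicked")?;
    Ok(reply)
}

fn main() -> std::io::Result<()> {
    // Prints: Hello from the app, you asked for GET /
    println!("{}", demo()?);
    Ok(())
}
```

In this sketch only the edge calls bind. The app side uses nothing but an outbound connect, which is why this pattern works behind NATs and firewalls that block inbound traffic.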

Why we built ngrok-rs

Ingress should be a high-level abstraction

Creating ingress today is a frustrating exercise in wrangling a slew of disparate low-level networking primitives. Developers must manage a number of technologies at different layers of the network stack, like DNS, TLS certificates, network-level CIDR policies, IP and subnet routing, load balancing, VPNs, and NATs, just to name a few. In short, developers are being forced to work with the assembly language of networking.

We built ngrok-rs to empower Rustaceans to declare ingress policies at a high layer of abstraction without sacrificing security and control. As an example, here’s how ngrok-rs lets developers specify ingress with declarative options, removing the burden of working with low-level details:

let listener = ngrok::Session::builder()
    .authtoken_from_env()
    .connect()
    .await?
    .http_endpoint()
    .domain("my-app.ngrok.dev")
    .circuit_breaker(0.5)
    .compression()
    .deny_cidr("200.2.0.0/16")
    .oauth(OauthOptions::new("google").allow_domain("acme.com"))
    .listen()
    .await?;

A complete list of supported middleware configurations can be found in the ngrok-rs API reference.
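For readers less familiar with CIDR notation, the following standalone sketch shows what a rule like deny_cidr("200.2.0.0/16") means in terms of address matching. It is illustrative only: cidr_contains is a hypothetical helper written for this post, it handles IPv4 only, and it is not how ngrok evaluates policies at its edge.

```rust
use std::net::Ipv4Addr;

/// Hypothetical helper: reports whether `ip` falls inside the IPv4 block
/// `cidr` (written as "address/prefix"). Returns None if `cidr` is malformed.
fn cidr_contains(cidr: &str, ip: Ipv4Addr) -> Option<bool> {
    let (base, prefix) = cidr.split_once('/')?;
    let base: Ipv4Addr = base.parse().ok()?;
    let prefix: u32 = prefix.parse().ok()?;
    if prefix > 32 {
        return None;
    }
    // A /16 keeps the top 16 bits; /0 matches every address.
    let mask = if prefix == 0 { 0 } else { u32::MAX << (32 - prefix) };
    Some(u32::from(base) & mask == u32::from(ip) & mask)
}

fn main() {
    let denied = "200.2.0.0/16";
    for ip in ["200.2.45.7", "200.3.0.1"] {
        let addr: Ipv4Addr = ip.parse().expect("valid IPv4 literal");
        println!("{ip} in {denied}: {:?}", cidr_contains(denied, addr));
    }
}
```

With the /16 rule above, any address whose first two octets are 200.2 is in the denied block, so 200.2.45.7 matches and 200.3.0.1 does not.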

Ingress should be environment-independent

Traditionally, ingress is tightly coupled to the environment where your app is deployed. For example, the same app deployed to your own datacenter, an EC2 instance, or a Kubernetes cluster requires wildly different networking setups. Running your app in those three different environments means you need to manage ingress in three different ways.

ngrok-rs decouples your app’s ingress from the environment where it runs.

When your application uses ngrok-rs, you can run it anywhere and it will receive traffic the same way. From an ingress standpoint, your application becomes portable: it does not matter whether it runs on bare metal, VMs, AWS, Azure, in Kubernetes, serverless platforms like Heroku or Render, a racked data-center server, a Raspberry Pi, or on your laptop.

Ingress shouldn’t require sidecars

Developers often distribute the ngrok agent alongside their own applications to create ingress for their IoT devices, SaaS offerings, and CI/CD pipelines. It can be challenging to bundle and manage the ngrok agent as a sidecar process. ngrok-rs eliminates the agent, simplifying distribution and management as well as enabling developers to easily deliver private label experiences.

How we designed ngrok-rs

We designed ngrok-rs with the goal of integrating seamlessly into the Rust ecosystem.

- Tunnels are TcpListeners: Tunnels in ngrok-rs act much like the TcpListener types from either tokio or async-std. They provide implementations of Stream to iterate over incoming connections, and hyper’s Accept for use as the connection backend to its Server.

- Connections are byte streams: Connections over ngrok-rs implement tokio’s AsyncRead and AsyncWrite traits, allowing them to be used by any code expecting generic byte streams. Additionally, they implement axum’s Connected trait, which allows connection types to provide additional context to its handlers, such as the remote address for the request.

- Built-in forwarding: In addition to handling connections in-process, ngrok-rs can work like the ngrok agent to forward connections to services listening on local ports.

- Integrated tracing: For logging integration, ngrok-rs uses the tracing framework, and its internal methods use the instrument macro for automatic span creation.

- FFI-friendly: Aside from Rust-first use cases, we’re also leveraging Rust’s excellent FFI support to power our next generation of ngrok SDKs, such as ngrok-js, ngrok-py, and ngrok-java. By sharing the base implementation, we’re able to get the most bang for our buck when it comes to making security fixes and performance improvements.

We validated ngrok-rs' design by collecting feedback from the community and fellow Rustaceans on our library design and ergonomics. We're also using ngrok-rs to build ngrok SDKs for other languages including JavaScript, Python, and Java.

What about other programming languages?

They’re coming! At the present moment (Mar. 2023), in addition to Rust, we support a language-native SDK for Go (ngrok-go).

We're leveraging Rust to build our next generation of ngrok SDKs. JavaScript and Python are in active development and you can follow along on GitHub. Expect other languages like C#, Java, and Ruby to follow. Tell us which language to prioritize next.

Get started

Getting started is as easy as running cargo add ngrok -F axum in your Rust app. Then check out the ngrok-rs crate docs, our getting started with ngrok-rs guide, and the open source ngrok-rs repo on GitHub.